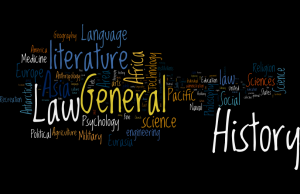

As I’ve been working through the many complications of finding, downloading, cleaning, uploading, and analyzing my data set, I took a moment to create the word cloud above using Wordle. Even that was a little complicated: Wordle requires a Java plugin that is no longer supported on any of the computers I’ve been using today. After trying three browsers and two computers, I finally found one where I could add the plugin (I cannot easily download or run updates on my work computers because of ITS protocol). I made the word cloud from a list of Subject Headings provided by the Library of Congress; I actually used a spreadsheet found here. When working with data, it seems even the simplest tasks come with complications! Regardless, I am not deterred!

The word cloud represents one aspect of what I am trying to look at with my data: a set of call numbers from the McEntegart Hall Library’s Reference Collection at St. Joseph’s College, Brooklyn. Call numbers are directly related to subject headings, so I will use a data set of call numbers and their subject headings to determine whether the library’s monographs fairly represent each of the academic programs offered at the College.

Initially, I wanted to look at the entire print collection, but as I began to pull the data, it seemed like too big a set (68,794 lines in Excel), at least for the time being; I plan to return to the larger set if I can get the subset to make sense. So I’ve chosen to look at the reference collection (1,926 lines in Excel) as a subset. It may offer some insight, but I don’t believe it is the best representation of the collection. First and foremost, because of the nature (and cost) of reference collections, my library has stopped adding print titles and relies more heavily on online databases (whose titles are not represented here), so there will be many gaps. Also, this past summer, we moved several of the reference titles into the circulating collection to give them a better chance of being found while students browse. So it’s not an ideal representation, but it’s a start!

Why would I want to know about this, aside from having to pick a data set to work with? Many academic libraries have to defend the large spaces they take up on campus, and, as many libraries before mine have had to accept, we are losing a quarter of our space to new classroom labs. As a result, I’ve been asked to condense the collection, which required hours of measuring and project planning. For now, at least, I do not have to remove any books from the collection (and there’s a little room for growth). Analyzing how items are represented through their call numbers and subject headings seems like an interesting way to interpret the collection, as well as to find its gaps and see where we may have too much in a particular area. While I was in the stacks measuring, I was reminded of something I’ve known for quite some time: the collection badly needs weeding (library speak for removing titles that are superfluous to the collection and do not support users’ needs).

I know this project won’t change the way library collections are seen on the macro scale, but it will help define and defend my library’s collection. Two administrators have asked me in the last few years whether I actually think physical books belong in a modern library. I don’t love being asked this, as I believe there is still a great need for physical materials, but with this project I can at least counter these inquiries with some impressive data and, hopefully, some nice visualizations. It is worth mentioning that I originally wanted to start with an open data set from NYC’s Open Data portal. A data set listing Brooklyn Public Library’s collection, uploaded to the portal, inspired this project, but I wanted to work with something more directly related to my day-to-day work (not that I don’t love public libraries and all that they do for this city!).

Pulling the Data:

I thought this would be the easiest part, and I was right! With a simple query in an Access database, I was able to pull call numbers and other bibliographic data and export them to Excel. As already mentioned, I planned to use the entire collection as my set, but have since decided to start with the reference collection subset instead, given how much time cleaning the full data set would take.

Cleaning the Data:

I expected this to be a difficult part of the project, and I am finding that to be true. First, LoC call numbers do not sort very nicely (read: quickly) in Excel because of their alphanumeric structure. I did find some workarounds and am still experimenting with my options. Another tool I’d like to look into is OpenRefine. Second, I have to remove duplicates created by multiple volumes of a single title (or decide whether that’s the right thing to do). I also need to pull and add data on the subject headings assigned to each call number. I am still working out how best to do this: (a) rerun a query in Access to include the assigned subject headings from the MARC records, or (b) assign a subject heading based on each item’s call number. Option (b) could potentially be done with a Python script that assigns subject headings by call number range. I still need to experiment with both possibilities.
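
To make the sorting problem and option (b) a bit more concrete, here is a minimal Python sketch, assuming call numbers arrive as plain strings in a form like `LB1117 .S65 2005 v.2`. It compares the class number as a number rather than as text (a plain text sort puts `QA100` before `QA76.9`), strips a trailing volume designation so multiple volumes of one title collapse into one entry, and looks up a subject heading by call number range. The regular expression, the optional `REF` prefix, the function names, and the `SUBJECT_RANGES` table are all hypothetical choices of mine; only the Child Study range (LB1101-1139) comes from the LoC headings, and real call numbers have more edge cases than this handles.

```python
import re

# Hypothetical, simplified pattern: an optional "REF" location prefix,
# 1-3 class letters, a class number with an optional decimal part,
# then everything else (cutters, date, volume).
LC_PATTERN = re.compile(r"^\s*(?:REF\.?\s+)?([A-Z]{1,3})\s*(\d+(?:\.\d+)?)\s*(.*)$")

# Matches a trailing volume designation such as "v.2" or "V. 12".
VOLUME_PATTERN = re.compile(r"\s*v\.\s*\d+\s*$", re.IGNORECASE)

# Hypothetical lookup table: (class letters, start, end, subject heading).
# Only the Child Study row is taken from the LoC list; add more rows as needed.
SUBJECT_RANGES = [
    ("LB", 1101, 1139, "Education -- Theory and practice of education -- Child study"),
]


def lc_sort_key(call_number):
    """Return a tuple that sorts LC call numbers roughly in shelf order."""
    match = LC_PATTERN.match(call_number.upper())
    if not match:
        # Unparseable values sort after everything else ("~" follows A-Z).
        return ("~", 0.0, call_number.upper())
    letters, number, rest = match.groups()
    # Comparing the class number numerically puts QA76.9 before QA100.
    return (letters, float(number), rest.strip())


def base_call_number(call_number):
    """Drop a trailing volume designation so volumes of one title collapse."""
    return VOLUME_PATTERN.sub("", call_number.strip())


def subject_for(call_number):
    """Return the subject heading whose range contains the class number, or None."""
    match = LC_PATTERN.match(call_number.upper())
    if not match:
        return None
    letters, number, _ = match.groups()
    value = float(number)
    for range_letters, start, end, heading in SUBJECT_RANGES:
        # Inclusive range; decimal extensions past the end (e.g. 1139.5) fall outside.
        if letters == range_letters and start <= value <= end:
            return heading
    return None


titles = [
    "QA100 .M4 1998",
    "LB1117 .S65 2005 v.2",
    "QA76.9 .D3 2001",
    "LB1117 .S65 2005 v.1",
    "LB1115 .B3 1999",
]
unique_titles = sorted({base_call_number(t) for t in titles}, key=lc_sort_key)
print(unique_titles)
for call_number in unique_titles:
    print(call_number, "->", subject_for(call_number))
```

Passing `key=lc_sort_key` to `sorted` keeps the class letters together and orders the numbers numerically. Whether collapsing volumes is right is still the open question above; keeping the original string alongside `base_call_number` would allow counting both ways.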

Data Tools to Use:

I intend to first experiment with the relational analysis tools listed on DiRT (Digital Research Tools): http://dirtdirectory.org/tadirah/relational-analysis. I also still have to work out how to correlate the volume of each subject heading in the collection with the academic programs. Looking at the programs offered, I will need to determine how each one maps onto subject headings. Should I develop a list of programs and then decide which subject heading(s) would be useful to each? For example, Child Study is a major at the College, and one relevant LoC heading with its corresponding call number range is “Education — Theory and practice of education — Child study / LB1101-1139”, but there are several other headings too.
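
One way to approach the program question is to give each program a list of call number ranges and count how many items fall into each. Here is a minimal Python sketch of that idea. The `PROGRAM_RANGES` mapping and the function names are hypothetical: only the Child Study range LB1101-1139 comes from the heading above, and the BF ranges are illustrative placeholders that would need checking against the LoC outline. An item can count toward more than one program, and anything that matches no program is tallied under `(no program)`, which is one rough way to spot areas of the collection that no program claims.

```python
import re
from collections import Counter

# Simplified pattern: 1-3 class letters followed by a class number.
LC_CLASS = re.compile(r"^\s*([A-Z]{1,3})\s*(\d+(?:\.\d+)?)")

# Hypothetical program-to-range mapping: (class letters, start, end).
# Only LB1101-1139 comes from the post; the BF ranges are placeholders.
PROGRAM_RANGES = {
    "Child Study": [("LB", 1101, 1139), ("BF", 721, 723)],
    "Psychology": [("BF", 1, 990)],
}


def programs_for(call_number, program_ranges):
    """Return every program whose ranges include this call number's class number."""
    match = LC_CLASS.match(call_number.upper())
    if not match:
        return []
    letters, number = match.group(1), float(match.group(2))
    return [
        program
        for program, ranges in program_ranges.items()
        if any(letters == cls and start <= number <= end for cls, start, end in ranges)
    ]


def count_by_program(call_numbers, program_ranges):
    """Count items per program; items matching no program go under "(no program)"."""
    counts = Counter()
    for call_number in call_numbers:
        counts.update(programs_for(call_number, program_ranges) or ["(no program)"])
    return counts


holdings = ["LB1115 .B3 1999", "BF721 .C45 2010", "BF431 .G7 2003", "QA76.73 .P98 2013"]
print(count_by_program(holdings, PROGRAM_RANGES))
```

Counting an item toward every program it fits is a deliberate choice in this sketch. Assigning each item to a single best-fit program would make the totals add up to the collection size, but it would hide the overlap between programs.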

Conclusions:

So I still have a lot of work to do and many more questions to ask and answer. I think two projects would complement this first one. The first would look at usage statistics for the titles in the collection: once I know what is in the collection and how it represents the programs, I can compare that to what students are actually using. What are students searching for in the catalog, and which books are they borrowing? The second, also an important outgrowth of this initial analysis of print materials, would look at data from the library’s various ebook subscriptions, such as title lists from each database and which titles have disappeared over the years we’ve subscribed. With these additional data points, a more holistic picture could be drawn. Since Michelle’s presentation on maps a few weeks ago was so thought-provoking, I would also like to include a physical representation of the collection, using a catalog add-on such as LibraryThing’s or StackMap, since GPS coordinates wouldn’t work here.

Scope creep is becoming extremely apparent as I wrap up this post. I have talked about a variety of tools I want to work with and tangential data sets I could look at, and I am starting to feel like I’m overreaching, especially for a project that I don’t expect to have a lofty impact on the library world. However, this data set will change my day-to-day work world a little, and that’s worth it.

Update as of 11/9/16: I came across this article in my work inbox: http://crl.acrl.org/content/77/6/765.full.pdf+html. I plan to use it as a model to further my own project.